How to choose a server based on your data center’s needs

To optimize performance in the enterprise, IT should evaluate its top priorities to determine how to choose a server and create the most efficient workloads.

We’d like to share some key considerations when selecting a server based on your data center’s needs.

The guide below was written by Stephen J. Bigelow on searchdatacenter.techtarget.com

Servers are the heart of modern computing, but deciding how to choose a server to host a workload can mean sorting through a bewildering array of hardware choices. While it’s possible to fill a data center with identical, virtualized and clustered white box systems that are capable of managing any workload, the cloud is changing how organizations run applications. As more companies deploy workloads in the public cloud, local data centers require fewer resources to host the workloads that remain on premises. This is prompting IT and business leaders to seek out more value and performance from the shrinking server fleet.

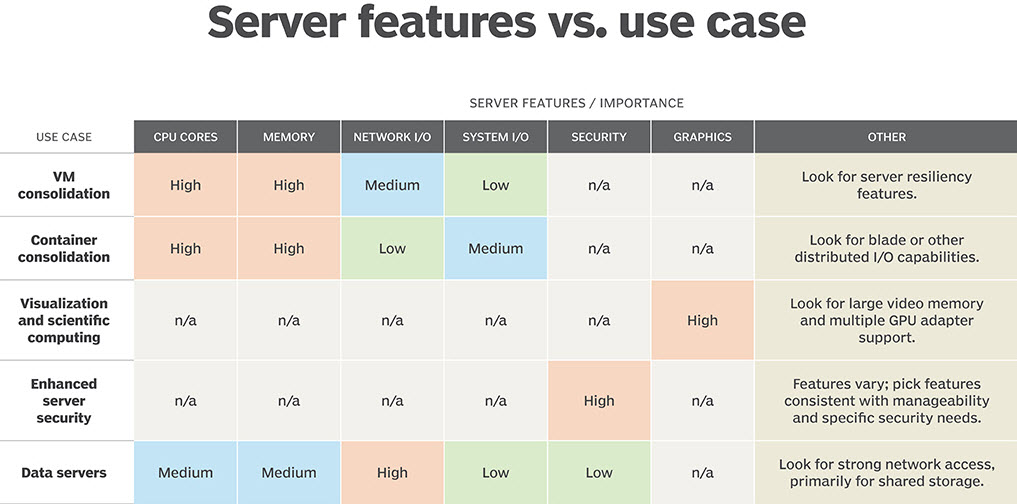

Today, the expansive sea of white box systems is challenged by a new wave of specialization with server features. Some organizations are rediscovering the notion that one server may indeed fit all. But you can select or even tailor server cluster hardware to accommodate particular usage categories.

VM consolidation and network I/O add benefits

A central benefit of server virtualization is the ability to host multiple VMs on the same physical server in order to utilize more of a server’s available compute resources. VMs primarily rely on server memory (RAM) and processor cores. It’s impossible to determine precisely how many VMs can reside on a given server because you can configure VMs to use a wide range of memory space and processor cores. However, as a rule of thumb, selecting a server with more memory and processor cores will typically allow more VMs to reside on the same server, which improves consolidation.
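The rule of thumb above can be turned into a rough sizing estimate. The sketch below is only an illustration: the host sizes, the VM profile, the 16 GB hypervisor reserve and the 4:1 vCPU-to-core ratio are all assumed values, not figures from the article, and memory is assumed not to be overcommitted. The VM count is capped by whichever resource runs out first.

```python
from dataclasses import dataclass


@dataclass
class Host:
    ram_gb: int   # installed memory
    cores: int    # physical processor cores


@dataclass
class VmProfile:
    ram_gb: int   # memory assigned to each VM
    vcpus: int    # virtual CPUs assigned to each VM


def max_vms(host: Host, vm: VmProfile,
            hypervisor_ram_gb: int = 16,     # assumed reserve for the hypervisor
            vcpus_per_core: float = 4.0) -> int:  # assumed CPU overcommit ratio
    """Estimate how many identical VMs fit, limited by the scarcer resource."""
    usable_ram = max(host.ram_gb - hypervisor_ram_gb, 0)
    by_ram = usable_ram // vm.ram_gb
    by_cpu = int(host.cores * vcpus_per_core) // vm.vcpus
    return min(by_ram, by_cpu)


# Hypothetical hosts and VM profile, chosen only to show the effect of scale.
small_host = Host(ram_gb=256, cores=16)
large_host = Host(ram_gb=1024, cores=56)
vm = VmProfile(ram_gb=8, vcpus=2)

print(max_vms(small_host, vm))  # memory-bound: 30
print(max_vms(large_host, vm))  # CPU-bound: 112
```

With these assumed numbers the smaller host runs out of memory first, while the larger host runs out of CPU first. A real estimate would also depend on the actual workload mix and the hypervisor in use.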

For example, a Dell EMC PowerEdge R940 rack server supports up to four processors with up to 28 cores each and offers 48 double data rate 4 (DDR4) dual inline memory module (DIMM) slots that support up to 6 TB of memory. Some organizations may choose to forgo individual rack servers in favor of blade servers for an alternative form factor or as part of hyper-converged infrastructure systems. Servers intended for high levels of VM consolidation should also include resiliency features, such as redundant hot-swappable power supplies, and resilient memory features, such as DIMM hot swap and DIMM mirroring.

A secondary consideration when choosing a server for high levels of consolidation is network I/O. Enterprise workloads routinely exchange data, access centralized storage resources, interface with users across the LAN or WAN and so on. Network bottlenecks can result when multiple VMs attempt to share the same low-end network port. Consolidated servers can benefit from a fast network interface, such as a 10 Gigabit Ethernet port, though it is often more economical and flexible to select a server with multiple 1 GbE ports that you can trunk together for more speed and resilience.
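To make the trade-off between one fast port and several trunked ports concrete, here is a minimal sketch that compares the average bandwidth share per VM and the capacity that remains after a port failure. The VM count and the port configurations are assumed values, and the functions are hypothetical helpers written for this illustration.

```python
def per_vm_bandwidth_mbps(port_gbps: float, port_count: int, vm_count: int) -> float:
    """Average share of aggregate bandwidth per VM, in Mbps."""
    total_mbps = port_gbps * port_count * 1000
    return total_mbps / vm_count


def surviving_capacity_gbps(port_gbps: float, port_count: int,
                            failed_ports: int = 1) -> float:
    """Aggregate bandwidth left after some ports fail."""
    return port_gbps * max(port_count - failed_ports, 0)


VM_COUNT = 20  # assumed number of VMs sharing the host's network ports

# Option A: a single 10 GbE port.
print(per_vm_bandwidth_mbps(10, 1, VM_COUNT))   # 500.0
print(surviving_capacity_gbps(10, 1))           # 0: one failure cuts connectivity

# Option B: four 1 GbE ports trunked together.
print(per_vm_bandwidth_mbps(1, 4, VM_COUNT))    # 200.0
print(surviving_capacity_gbps(1, 4))            # 3: degraded but still connected
```

These are averages only. With typical link aggregation a single traffic flow is usually pinned to one member link, so an individual connection on the trunk would generally not exceed 1 Gbps even though the aggregate is higher.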

When choosing a server, evaluate the importance of certain features based on the use cases.

Figure from https://searchdatacenter.techtarget.com/tip/How-to-choose-a-server-based-on-your-data-centers-needs

Container consolidation opens up RAM on how to choose a server

Virtualized containers represent a relatively new approach to virtualization that allows developers and IT teams to create and deploy applications as instances that package code and dependencies together — yet containers share the same underlying OS kernel. Containers are attractive for highly scalable cloud-based application development and deployment.

As with VM consolidation, compute resources will have a direct effect on the number of containers that a server can potentially host, so servers intended for containers should provide an ample quantity of RAM and processor cores. More compute resources will generally allow for more containers.

But large numbers of simultaneous containers can impose serious internal I/O challenges for the server. Every container must share a common OS kernel. This means there could be dozens or even hundreds of containers trying to communicate with the same kernel, resulting in excess OS latency that might impair container performance. Similarly, containers are often deployed as application components, not complete applications. Those component containers must communicate with each other and scale as needed to enhance the performance of the overall workload. This can produce enormous — sometimes unpredictable — API traffic between containers. In both cases, I/O bandwidth limitations within the server itself, as well as the application’s architectural design efficiency, can limit the number of containers a server might host successfully.

Network I/O can also pose a potential bottleneck when many containerized workloads must communicate outside of the server across the LAN or WAN. Network bottlenecks can slow access to shared storage, delay user responses and even precipitate workload errors. Consider the networking needs of the containers and workloads, and configure the server with adequate network capacity — either as a fast 10 GbE port or with multiple 1 GbE ports, which you can trunk together for more speed and resilience.

Most server types are capable of hosting containers, but organizations that adopt high volumes of containers will frequently choose blade servers to combine compute capacity with measured I/O capabilities, spreading out containers over a number of blades to distribute the I/O load. One example of servers for containers is the Hewlett Packard Enterprise (HPE) ProLiant BL460c Gen10 Server Blade, which supports processors with up to 26 cores each and 2 TB of DDR4 memory.

Visualization and scientific computing affect how to choose a server

Graphics processing units (GPUs) are increasingly appearing at the server level to assist in mathematically intensive tasks ranging from big data processing and scientific computing to more graphics-related tasks, such as modeling and visualization. GPUs also enable IT to retain and process sensitive, valuable data sets in a better-protected data center rather than allow that data to flow to business endpoints where it can more easily be copied or stolen.

Generally, the support for GPUs requires little more than the addition of a suitable GPU card in the server — there is little impact on the server’s traditional processor, memory, I/O, storage, networking or other hardware details. However, the GPU adapters included in enterprise-class servers are often far more sophisticated than the GPU adapters available for desktops or workstations. In fact, GPUs are increasingly available as highly specialized modules for blade systems.

For example, the HPE ProLiant WS460c Gen9 Graphics Server Blade uses Nvidia Tesla M60 Peripheral Component Interconnect Express graphics cards with two GPUs, 4,096 Compute Unified Device Architecture (CUDA) cores and 16 GB of graphics DDR5 (GDDR5) video memory. The graphics system touts support for up to 48 GPUs through the use of multiple graphics server blades. The large volume of supported GPU hardware, especially when GPU hardware is also virtualized, allows many users and workloads to share the graphics subsystem.
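The per-card figures quoted above can be scaled up to the 48-GPU maximum with some simple arithmetic. The sketch below assumes every GPU in the pool matches the quoted card, and that a virtualized GPU hands each user a fixed memory slice that cannot span GPUs. The 2 GB slice is a hypothetical value chosen for illustration, not a vendor profile.

```python
# Per-card figures quoted in the article for the Nvidia Tesla M60.
CARD_GPUS = 2
CARD_CUDA_CORES = 4096
CARD_MEMORY_GB = 16


def pool_totals(gpu_count: int) -> dict:
    """Scale the per-card figures to a pool of identical GPUs."""
    cores_per_gpu = CARD_CUDA_CORES // CARD_GPUS   # 2,048 cores per GPU
    memory_per_gpu = CARD_MEMORY_GB // CARD_GPUS   # 8 GB per GPU
    return {
        "cards": gpu_count // CARD_GPUS,
        "cuda_cores": gpu_count * cores_per_gpu,
        "memory_gb": gpu_count * memory_per_gpu,
    }


def users_per_pool(gpu_count: int, gb_per_user: int) -> int:
    """Users supported if each one gets a fixed memory slice of a single GPU."""
    memory_per_gpu = CARD_MEMORY_GB // CARD_GPUS
    return gpu_count * (memory_per_gpu // gb_per_user)


print(pool_totals(48))        # {'cards': 24, 'cuda_cores': 98304, 'memory_gb': 384}
print(users_per_pool(48, 2))  # 192 users at an assumed 2 GB each
```

Memory is only one constraint. The number of users a shared GPU can actually serve also depends on how much compute each workload demands.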

Info from https://searchdatacenter.techtarget.com/tip/How-to-choose-a-server-based-on-your-data-centers-needs
